TorchServe

TorchServe is a performant, flexible, and easy-to-use tool for serving PyTorch models in production.

What’s going on in TorchServe?

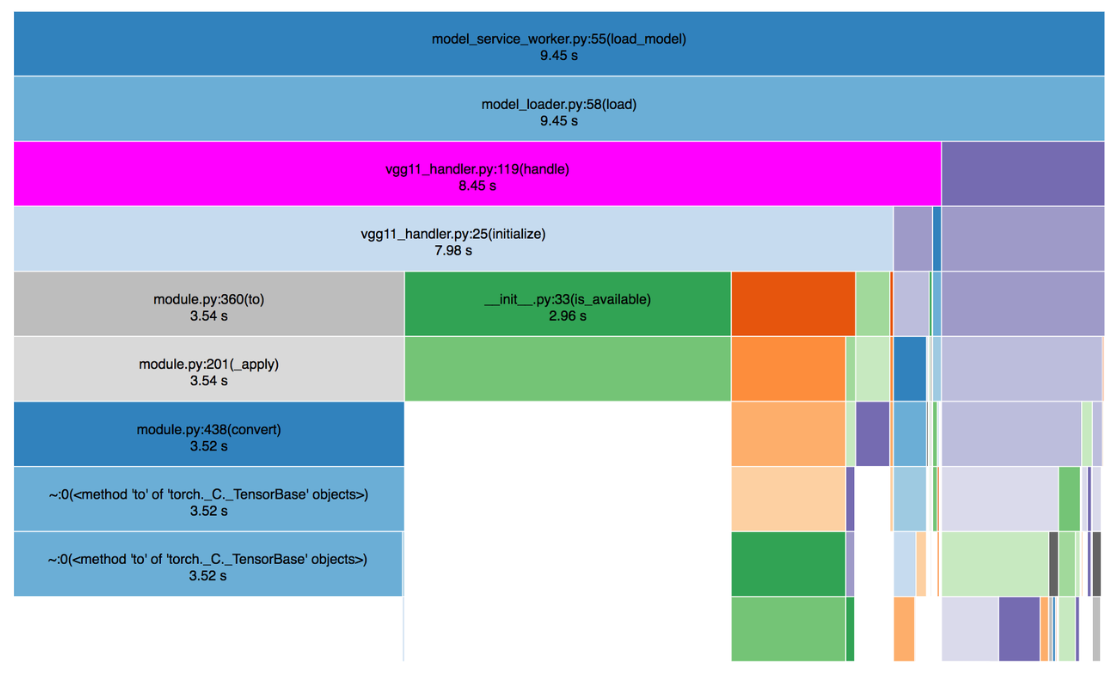

Grokking Intel CPU PyTorch performance from first principles: a TorchServe case study

Grokking Intel CPU PyTorch performance from first principles (Part 2): a TorchServe case study

Case Study: Amazon Ads Uses PyTorch and AWS Inferentia to Scale Models for Ads Processing

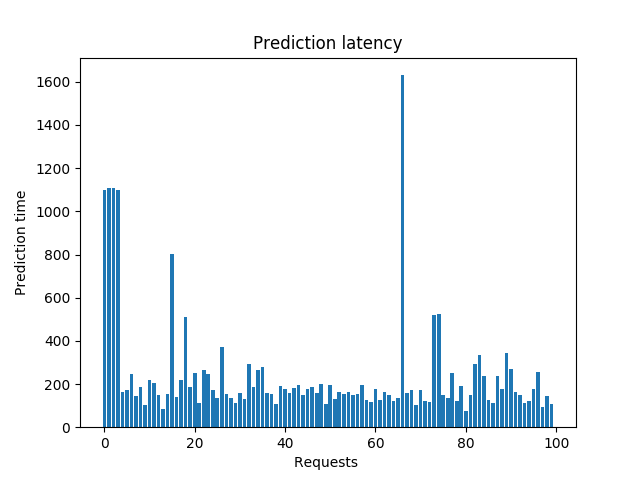

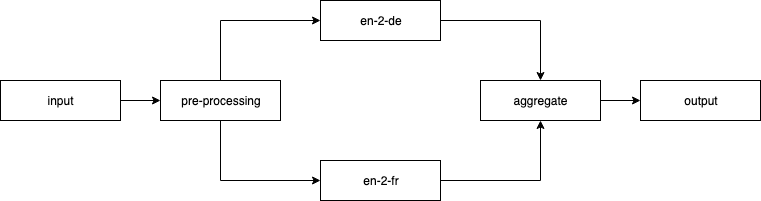

Optimize your inference jobs using dynamic batch inference with TorchServe on Amazon SageMaker

Evolution of Cresta’s machine learning architecture: Migration to AWS and PyTorch